MHServerEmu Progress Report: April 2025

Here is another MHServerEmu Progress Report for you.

Current Status

Compared to the crazy ride of February and March, when a lot of features just started working all of a sudden, April has definitely been a slower month, but things are still happening in the background. After implementing login rewards early in the month, I took a break, during which Alex continued to work on bug fixes, optimization, and minor improvements. When I returned from my break, Alex shifted to digging into the Unreal side of the game again, while I started working on the big server architecture overhaul I have mentioned a number of times over the past few months.

Architecture Overhaul?

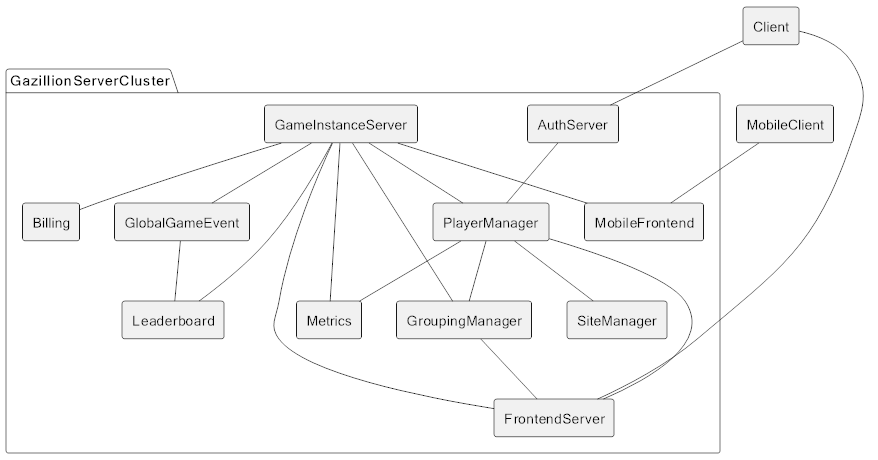

When we talk about server architecture, it is important to mention how MHServerEmu differs from what Gazillion had back when they were running the game. While MHServerEmu is a single monolithic all-in-one package that does almost everything, Gazillion had an entire cluster of servers that handled various aspects of the overall online service. Here is a diagram I did back in October 2023 based on the network protocol embedded in the client:

Based on our packet captures, at the very least GameInstanceServer (GIS) and FrontendServer (FES) were horizontally scalable, meaning Gazillion had more than one GIS and FES and could add more as needed.

This kind of architecture makes a lot of sense if your goal is to have a single centralized service and have it scale up to potentially tens or even hundreds of thousands of players. However, the preservation aspect has been a top priority for me since the inception of this project, and having a single centralized service may be the worst way of preserving an online game, especially when you are talking about a game based on IP licensed from a notoriously litigious company. This is why I opted for a monolithic self-contained server approach: while it is not as scalable, it makes the server significantly easier to set up (effectively as easy as installing a mod for a single-player game), and when you host it locally yourself, there is no practical way to “take it away” anymore.

Despite MHServerEmu being a single server, its architecture still mirrors Gazillion’s server cluster with functionality originally delegated to separate servers now running as “services” on different threads of the same process. Up until this point the vast majority of our efforts went into implementing GIS functionality, which is what handles practically all gameplay-related processing. Implementations of other services have been little more than stubs, doing just the bare minimum required to get a player into a game instance.

As the gameplay side got closer to a feature-complete state, we have seen increases in player numbers on some of the more popular community-hosted servers, in some cases reaching hundreds of concurrent players. The existing implementations of many of the supporting services were practically just scaffolding, and some of them were straining under the load, because they were doing work they were never really designed to do. One good example of this was our old implementation of the frontend server, which processes raw client connections and routes received data to various backend services. Because the old implementation was quickly hacked together to handle a locally connected client, it had issues handling data from unreliable connections, such as those that involved Wi-Fi on the client side, which resulted in some players being frequently disconnected from the server.

I did my best to do quick fixes as issues arose, which resulted in things like the current instancing solution you can see on some of the community-hosted servers. However, now that gameplay was in a reasonably decent state, it was time to take another good look at the supporting services and the overall architecture.

Frontend Improvements

Because we have been relying on quick fixes for non-gameplay related functionality so far, a web of technical debt started to accumulate, and it needed to be untangled.

The first area I looked at this month was the aforementioned Frontend. I have identified the following issues with it:

- Other services depended on the concrete `FrontendClient` implementation. This made it impossible to, for example, do an alternative Frontend implementation that could potentially run as a separate process, if the need ever arises.

- The protocol of an incoming message depends on the current state of the connection, meaning that messages cannot be deserialized as soon as they arrive. Previously new buffers were allocated for every single incoming message to store them until they could be deserialized, resulting in increased garbage collection (GC) pressure and more frequent GC-related stuttering.

- Packet parsing was not buffered, meaning that if a packet arrived in fragments across multiple data transmissions, it could not be parsed, and the client was forced to disconnect. This is the previously described Wi-Fi issue.

- The authentication process was not implemented as strictly as it should have been, which gave some leeway to unauthenticated and potentially malicious clients.

- The Frontend had no rate limiting, making it highly susceptible to potential DoS attacks.

Here is a summary of what I have done this month to address these issues:

- Other services can now interact with the Frontend through an abstract `IFrontendClient` interface, which provides flexibility in how the Frontend is implemented. There is still some remaining dependency on the concrete frontend implementation, primarily in the Player Manager service, which will eventually be cleaned up. The end goal is to make all services completely independent from the concrete frontend implementation we have.

- The entire message handling pipeline has been overhauled:

  - Message buffers are now pooled and reused.

  - Messages are now routed asynchronously on thread pool threads as they arrive rather than on a single dedicated thread, resulting in reduced latency.

  - Various smaller optimizations have been made, such as replacing `lock` with `SpinLock` when swapping queues and reducing the number of protocol table dictionary lookups.

- Packet parsing has been completely rewritten to allow it to persist state between data receives and reconstruct fragmented packets.

- The authentication process is now stricter, and clients are disconnected when they attempt to do something unexpected.

- The Frontend now limits incoming data from each connected client using an implementation of the token bucket algorithm.
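For readers unfamiliar with the token bucket algorithm mentioned in the last item, here is a minimal sketch of the general technique. It is not the actual MHServerEmu code: the `TokenBucket` class, its members, and the idea of measuring cost in bytes are all assumptions made for illustration. Time is passed in explicitly so the behavior is easy to reason about.

```csharp
using System;

// Hypothetical per-client limiter. Names and units are illustrative only.
public sealed class TokenBucket
{
    private readonly double _capacity;        // maximum burst size (assumed to be bytes)
    private readonly double _refillPerSecond; // sustained rate (assumed bytes per second)
    private double _tokens;
    private TimeSpan _lastRefill;

    public TokenBucket(double capacity, double refillPerSecond, TimeSpan now)
    {
        _capacity = capacity;
        _refillPerSecond = refillPerSecond;
        _tokens = capacity;   // start full so a fresh connection can burst
        _lastRefill = now;
    }

    // Returns true if 'cost' tokens are available at time 'now' and consumes them.
    public bool TryConsume(double cost, TimeSpan now)
    {
        // Refill in proportion to elapsed time, never exceeding the capacity
        double elapsed = (now - _lastRefill).TotalSeconds;
        if (elapsed > 0)
        {
            _tokens = Math.Min(_capacity, _tokens + elapsed * _refillPerSecond);
            _lastRefill = now;
        }

        if (cost > _tokens)
            return false;     // over the limit: the caller decides whether to drop or disconnect

        _tokens -= cost;
        return true;
    }
}
```

The appeal of this approach is that short bursts are tolerated up to the bucket capacity, while the long-term average is bounded by the refill rate. What happens to a client that exceeds the limit is a policy decision outside the scope of this sketch.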

These Frontend changes are now available in nightly builds. As expected, there were some early issues to iron out, like this one small mistake that led to a single malformed packet from an unauthenticated client causing a total server crash with 3 GB of logs (oops). But now that they have been taken care of, the Frontend is in a much better state in terms of performance, reliability, and modularity.
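To illustrate what "persisting state between data receives" means for fragmented packets, here is a minimal sketch. The framing used here (a 2-byte big-endian length prefix followed by the payload) is an assumption chosen for brevity and is not the game's actual wire format, and `PacketReassembler` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical framing: 2-byte big-endian length prefix, then the payload.
public sealed class PacketReassembler
{
    // Bytes carried over from earlier receives that do not yet form a full packet
    private readonly List<byte> _pending = new List<byte>();

    // Feeds one received chunk and returns every payload completed by it.
    public List<byte[]> Receive(byte[] chunk)
    {
        _pending.AddRange(chunk);
        var packets = new List<byte[]>();

        while (_pending.Count >= 2)
        {
            int length = (_pending[0] << 8) | _pending[1];
            if (_pending.Count < 2 + length)
                break;  // packet is still incomplete, wait for the next receive

            packets.Add(_pending.GetRange(2, length).ToArray());
            _pending.RemoveRange(0, 2 + length);
        }

        return packets;
    }
}
```

A parser without the `_pending` state would have to treat the first, incomplete chunk as an error, which matches the disconnect behavior described earlier. A production version would likely avoid the per-packet allocations shown here, for example by using pooled buffers.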

Chat Improvements

The next big area of technical debt we have is the Player Manager, which is a service that is supposed to load balance connected clients across different game instances and manage related features, like ensuring all members of a party end up in the same region instance. However, before meaningful improvements can be made to it, two main issues need to be solved:

- Our old implementation of the service system allowed only very limited communication between services, mostly related to routing client messages. This was insufficient for the Player Manager, which requires extensive bidirectional communication with game instances.

- The chat command system, which dates back to August 2023 and is some of the oldest server code we still use, was tightly coupled with the existing concrete Player Manager implementation.

The communication issue was relatively easy to solve: we now have a more flexible event-like system, which can be extended with new message types as needed. We will be monitoring its performance, especially in relation to GC pressure under heavy load, but overall it seems to be doing the job we need so far.
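As a rough illustration of what an extensible, event-like messaging system between services could look like, here is a minimal sketch. Every name in it (`IServiceMessage`, `PlayerCountChanged`, `ServiceBus`) is hypothetical, and the real implementation may be structured quite differently. Messages are modeled as structs on the assumption that this helps keep GC pressure down.

```csharp
using System;
using System.Collections.Generic;

// All names below are hypothetical and only illustrate the idea.
public interface IServiceMessage { }

// A new message type is just a new struct; the dispatcher does not change.
public readonly struct PlayerCountChanged : IServiceMessage
{
    public readonly ulong GameId;
    public readonly int PlayerCount;

    public PlayerCountChanged(ulong gameId, int playerCount)
    {
        GameId = gameId;
        PlayerCount = playerCount;
    }
}

public sealed class ServiceBus
{
    private readonly Dictionary<Type, List<Delegate>> _handlers = new Dictionary<Type, List<Delegate>>();

    public void Subscribe<T>(Action<T> handler) where T : struct, IServiceMessage
    {
        if (!_handlers.TryGetValue(typeof(T), out var list))
            _handlers[typeof(T)] = list = new List<Delegate>();

        list.Add(handler);
    }

    // The generic parameter avoids boxing the struct when it is dispatched.
    public void Publish<T>(in T message) where T : struct, IServiceMessage
    {
        if (!_handlers.TryGetValue(typeof(T), out var list))
            return;

        foreach (Delegate handler in list)
            ((Action<T>)handler)(message);
    }
}
```

This sketch is not thread-safe and dispatches synchronously on the caller's thread. Services running on separate threads would need a queue or a lock around the handler table.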

The chat command problem is trickier to deal with, however. Not only was our code left mostly untouched since August 2023, it was also heavily based on the command system from a work-in-progress Diablo III server emulator called Mooege, which was written back in 2011. The command system needed a total overhaul, which also required all command implementations to be adjusted accordingly. To make matters worse, in order to decouple everything from the Player Manager, we needed to overhaul our chat implementation, which was another overextended service stub.

The first order of business was improving the foundation, which was chat. Chat messages need to go through quite a journey to reach other players:

Client->FES->PlayerManager->GIS->Game->GroupingManager->Client

The key service in this chain is the Grouping Manager, which is effectively the “chat service”. Previously we immediately routed chat messages from games to the Grouping Manager, which was problematic for a number of reasons. First, the Grouping Manager has no access to game state, so it has no idea what players are “nearby” to filter recipients in say and emote channels, or what prestige level each player is at to color their names accordingly. Second, command parsing happened at the Grouping Manager level, so if a command needed to do something game-related, which is very often the case, it needed to go through the Player Manager to get a reference to a game that the client was in. This coupled the Grouping Manager, the command system, the Player Manager, and game instance management in a single messy blob.

To solve this, initial chat message handling now happens at the game level, which gives us the opportunity to include necessary game state data before forwarding a message to the Grouping Manager, or even avoid forwarding altogether if the message is successfully parsed as a command. This in turn gives commands direct access to the game instances that invoked them, which circumvents the entire roundabout trip through the Player Manager.
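The flow described above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the actual code: `ChatMessage`, `Game`, `HandleChat`, and the delegate standing in for the Grouping Manager are all hypothetical names, and the only detail taken from this report is that commands start with `!`.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical types: none of these names are taken from the MHServerEmu codebase.
public record ChatMessage(string Sender, string Text, int PrestigeLevel, IReadOnlyList<string> Recipients);

public sealed class Game
{
    private readonly Dictionary<string, Func<Game, string[], string>> _commands =
        new Dictionary<string, Func<Game, string[], string>>();
    private readonly Action<ChatMessage> _forwardToGroupingManager;

    // Stand-in for game state that only the game instance knows about
    public List<string> NearbyPlayers { get; } = new List<string>();

    public Game(Action<ChatMessage> forwardToGroupingManager)
    {
        _forwardToGroupingManager = forwardToGroupingManager;
    }

    public void RegisterCommand(string name, Func<Game, string[], string> handler)
    {
        _commands[name] = handler;
    }

    // Returns true if the text was handled as a command and never left the game.
    public bool HandleChat(string sender, int prestigeLevel, string text, out string reply)
    {
        reply = string.Empty;

        if (text.StartsWith('!'))
        {
            string[] parts = text.Substring(1).Split(' ', StringSplitOptions.RemoveEmptyEntries);
            if (parts.Length > 0 && _commands.TryGetValue(parts[0], out var handler))
            {
                // The command receives the game directly, with no lookup through another service
                reply = handler(this, parts[1..]);
                return true;
            }
        }

        // Not a command: attach game state the chat service cannot see, then forward
        _forwardToGroupingManager(new ChatMessage(sender, text, prestigeLevel, NearbyPlayers.ToArray()));
        return false;
    }
}
```

The point of the design is that the chat service only ever receives messages that already carry their recipients and display data, so it no longer needs a path back into game state.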

Thanks to this, proximity- and region-based chat channels now work as they should, and players on community-hosted public servers now have to use the Social, Trade, and LFG channels for cross-region communication, just like back in the day. One side effect of implementing proximity chat is that the Nearby tab of the social panel now displays nearby players.

There appear to be some issues related to updating the status of players in the Nearby tab, which may or may not be client-side UI issues. We will look into them further as part of the bigger community system update, which will include implementing other tabs and broadcasting player status data across different game instances. For now we still have some command system improvements to do.

Command Improvements

As part of this command system overhaul, I wanted to not only get rid of unnecessary dependencies on other services, but also make improvements to some long-standing issues with maintaining command implementations:

- There was too much boilerplate code for validating invokers and arguments.

- The existing help functionality was too inconvenient to use, and updating the command list in our documentation took too much effort to do manually, leading to it always being out of date.

My plan for tackling both of these problems was to make validation more data-driven and provide the necessary data to the command system using C# attributes, which we already used to define commands.

For example, this is what the !region warp command looked like previously:

```csharp
[Command("warp", "Warps the player to another region.\nUsage: region warp [name]", AccountUserLevel.Admin)]
public string Warp(string[] @params, FrontendClient client)
{
    if (client == null)
        return "You can only invoke this command from the game.";

    if (@params.Length == 0)
        return "Invalid arguments. Type 'help region warp' to get help.";

    // Implementation
}
```

And here is the same command with the current version of attribute-based validation:

```csharp
[Command("warp", "Warps the player to another region.\nUsage: region warp [name]")]
[CommandUserLevel(AccountUserLevel.Admin)]
[CommandInvokerType(CommandInvokerType.Client)]
[CommandParamCount(1)]
public string Warp(string[] @params, NetClient client)
{
    // Implementation
}
```

Not only is there slightly less copying and pasting boilerplate code, but now we can use the data provided in these attributes to automatically generate the documentation we need.
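To show how attribute data can drive both validation and documentation, here is a minimal self-contained sketch using reflection. The attribute classes are simplified stand-ins for the ones shown above, and `CommandRunner`, the error strings, the enum values other than `Admin`, and the interpretation of the parameter count as a minimum are all assumptions. The actual command system is likely more involved.

```csharp
using System;
using System.Reflection;
using System.Text;

// Simplified stand-ins for the attributes shown above; the real definitions may differ.
public enum AccountUserLevel { User, Moderator, Admin }

[AttributeUsage(AttributeTargets.Method)]
public sealed class CommandAttribute : Attribute
{
    public string Name { get; }
    public string Help { get; }
    public CommandAttribute(string name, string help) { Name = name; Help = help; }
}

[AttributeUsage(AttributeTargets.Method)]
public sealed class CommandUserLevelAttribute : Attribute
{
    public AccountUserLevel Level { get; }
    public CommandUserLevelAttribute(AccountUserLevel level) { Level = level; }
}

[AttributeUsage(AttributeTargets.Method)]
public sealed class CommandParamCountAttribute : Attribute
{
    public int Count { get; }
    public CommandParamCountAttribute(int count) { Count = count; }
}

public class RegionCommands
{
    [Command("warp", "Warps the player to another region.")]
    [CommandUserLevel(AccountUserLevel.Admin)]
    [CommandParamCount(1)]
    public string Warp(string[] @params) { return "Warping to " + @params[0]; }
}

// Hypothetical helper that reads the attributes at runtime.
public static class CommandRunner
{
    // Checks the declared requirements before invoking, so handlers need no boilerplate.
    public static string Invoke(object group, string name, string[] args, AccountUserLevel invokerLevel)
    {
        foreach (MethodInfo method in group.GetType().GetMethods())
        {
            var command = method.GetCustomAttribute<CommandAttribute>();
            if (command == null || command.Name != name)
                continue;

            var level = method.GetCustomAttribute<CommandUserLevelAttribute>();
            if (level != null && invokerLevel < level.Level)
                return "You do not have permission to use this command.";

            // Assumption: the declared count is treated as a minimum
            var count = method.GetCustomAttribute<CommandParamCountAttribute>();
            if (count != null && args.Length < count.Count)
                return "Invalid arguments. Expected at least " + count.Count + ".";

            return (string)method.Invoke(group, new object[] { args });
        }

        return "Unknown command.";
    }

    // Builds one documentation line per command from the same attribute data.
    public static string GenerateDocs(Type groupType)
    {
        var sb = new StringBuilder();
        foreach (MethodInfo method in groupType.GetMethods())
        {
            var command = method.GetCustomAttribute<CommandAttribute>();
            if (command == null)
                continue;

            var level = method.GetCustomAttribute<CommandUserLevelAttribute>();
            sb.AppendLine(command.Name + " | " + command.Help + " | " + (level?.Level ?? AccountUserLevel.User));
        }
        return sb.ToString();
    }
}
```

Because validation and documentation read the same attributes, the generated command list cannot drift out of sync with what the server actually enforces.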

At the time of writing, the command system overhaul is what I am actively working on, and it should be ready to be rolled out relatively soon.

Looking Ahead

This is just the beginning for this architecture overhaul project. The remaining major goals for May include:

- Overhauling the Player Manager, which would potentially include more frequent database write operations (i.e. less egregious rollbacks when the server is having issues), as well as the first round of load balancing improvements.

- Integrating the leaderboard system implementation that has been sitting in a nearly finished state for months. This requires other architectural changes to be done first.

After finishing these, I will be shifting my focus to getting version 0.6.0 ready for release in early June, hopefully in time for the game’s 12th anniversary on June 4th. We will be talking about our plans for 0.7.0 and the updated roadmap to 1.0.0 at some point after that.

Time to go back to work, see you next time!